RGB

RGB Frame Camera Overview

An RGB camera captures red, green, and blue wavelengths of light to create color images of the scene. Full-frame RGB cameras, such as the Sony ILX-LR1, use large sensors designed to capture as much light and detail as possible. This helps offset the effects of slightly dimmer lighting conditions and allows for much greater image detail in good lighting compared to other types of cameras.

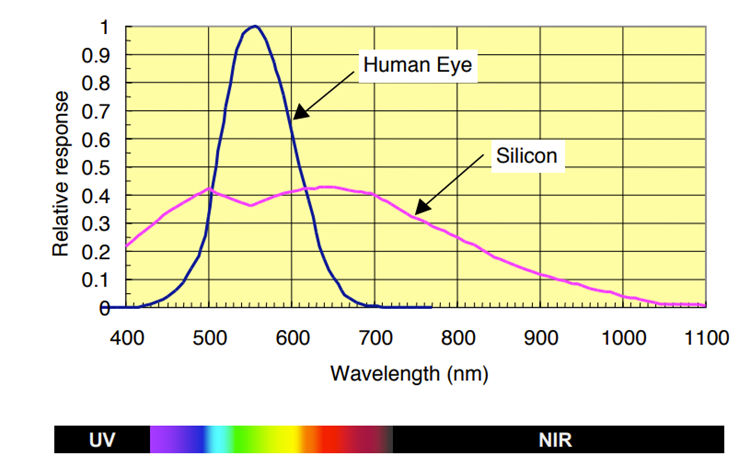

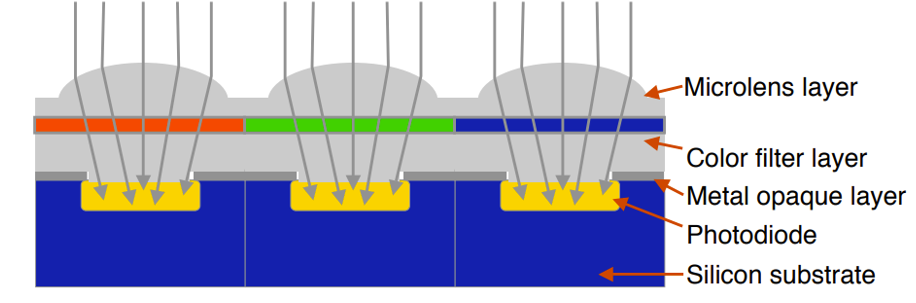

RGB cameras are sensitive to light across the 350 to 1050 nm wavelength range, while the human eye primarily sees between 450 and 650 nm. An RGB sensor can therefore detect a wider range of wavelengths than the human eye. However, these cameras filter the incoming light into red, green, and blue wavelengths to construct the image that is produced.

Data collection considerations

RGB imagery has few limitations; however, the following characteristics can negatively affect data processing and orthomosaic quality:

Scene Homogeneity

Areas with large homogeneous feature sets are no more difficult to photograph, but they do cause issues when generating a mosaic from those images. Examples include bodies of water, deserts, late-season agricultural fields, and large open grass fields. Largely homogeneous datasets contain few unique features shared between images, which leads to poor feature matching in post-processing and often to incomplete orthomosaics.

Poor Illumination

While full-frame cameras with large sensors can perform somewhat better in low light than other types of cameras, they are not immune to it. Less light reaching the camera sensor results in less detail in the captured image. Additionally, in automated capture modes, exposure compensation and ISO increases can introduce more noise.

Imagery with large shadows cast on the subject can also cause issues when processing the images into an orthomosaic. These shaded regions can come from tall nearby structures or objects, from low sun angles, or both. Low sun angles cause long shadows, which affects early and late flights in the summer and most flights during the winter.
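To get a feel for how quickly shadows grow as the sun gets lower, the relationship can be sketched with basic trigonometry. This is a rough illustration only: it assumes flat ground and a vertical object, and the function name and the 10 m object height are made up for the example.

```python
import math


def shadow_length_m(object_height_m: float, sun_elevation_deg: float) -> float:
    """Approximate shadow length on flat ground for a vertical object."""
    if not 0 < sun_elevation_deg <= 90:
        raise ValueError("sun elevation must be in (0, 90] degrees")
    # The shadow is the adjacent side of a right triangle whose
    # opposite side is the object height.
    return object_height_m / math.tan(math.radians(sun_elevation_deg))


if __name__ == "__main__":
    # Hypothetical 10 m structure at three sun elevations.
    for elevation in (60, 30, 15):
        length = shadow_length_m(10.0, elevation)
        print(f"sun at {elevation} deg: shadow of about {length:.1f} m")
```

Under these assumptions, halving the sun elevation from 30 to 15 degrees more than doubles the shadow length, which is why flights close to sunrise or sunset tend to be affected the most.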

High Reflectivity

Highly reflective surfaces can cause issues when processing your images. Reflected light can obscure objects in the image, or the surface can mirror nearby objects and structures, so there is no consistent pattern for the processing software to detect in that area. Reflective surfaces to avoid where possible include water, glass, and shiny plastics or metals.

Lens Options

Many RGB frame cameras offer different lens choices or interchangeable lenses. Your choice of lens can impact data quality in various ways:

Ground Sampling Distance (GSD) and Field of View (FOV) vary based on lens options: Your camera's lens directly impacts both of these data characteristics. FOV affects image overlap and flight line spacing, which must be planned so that you have enough overlap for orthomosaic generation. The lens also determines the GSD you will get at a given flight altitude.

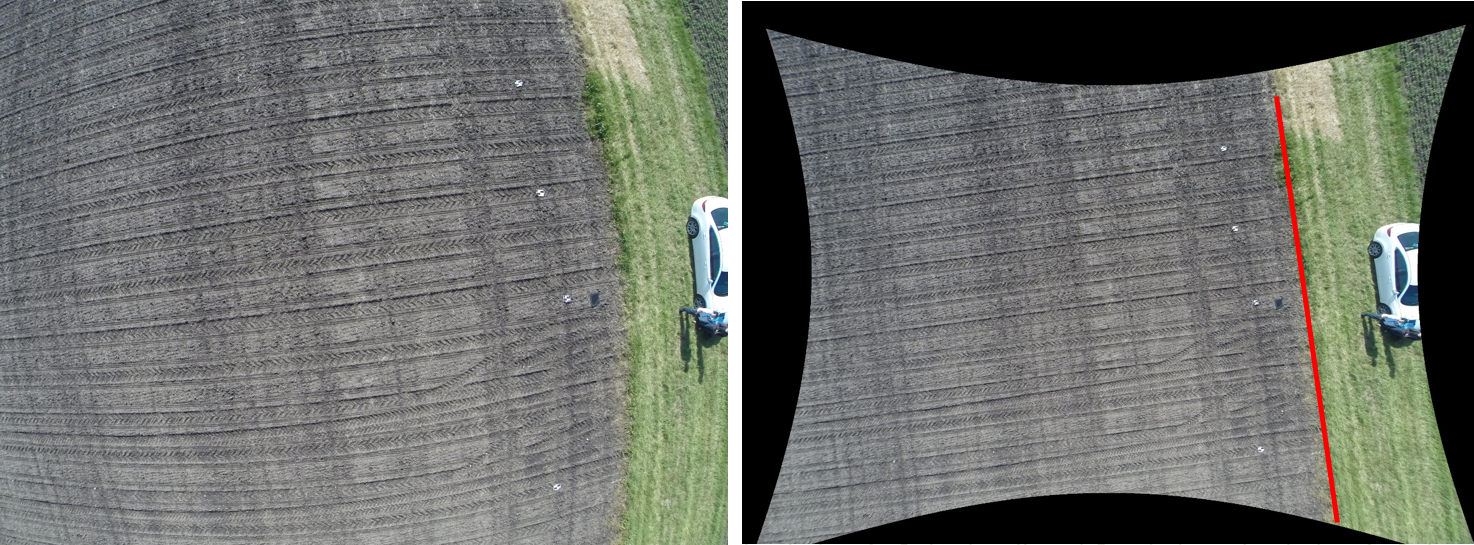
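The link between lens, GSD, and flight line spacing can be sketched with the standard pinhole-camera approximations for a nadir-pointing camera over flat terrain. The sensor values below (36 mm wide, 4.0 µm pixel pitch), the 100 m altitude, and the 70% sidelap are illustrative assumptions rather than specifications of any particular camera, and the function names are hypothetical.

```python
def gsd_m(pixel_pitch_um: float, focal_length_mm: float, altitude_m: float) -> float:
    """Ground sampling distance in metres per pixel (nadir, flat terrain)."""
    return (pixel_pitch_um * 1e-6) * altitude_m / (focal_length_mm * 1e-3)


def footprint_width_m(sensor_width_mm: float, focal_length_mm: float,
                      altitude_m: float) -> float:
    """Across-track ground coverage of a single image in metres."""
    return sensor_width_mm * altitude_m / focal_length_mm


def line_spacing_m(footprint_m: float, sidelap: float) -> float:
    """Distance between flight lines for a given sidelap fraction (0 to 1)."""
    if not 0 <= sidelap < 1:
        raise ValueError("sidelap must be in [0, 1)")
    return footprint_m * (1.0 - sidelap)


if __name__ == "__main__":
    # Assumed values for illustration only.
    sensor_width_mm, pixel_pitch_um = 36.0, 4.0
    altitude_m, sidelap = 100.0, 0.70
    for focal_length_mm in (24.0, 50.0):
        gsd = gsd_m(pixel_pitch_um, focal_length_mm, altitude_m)
        width = footprint_width_m(sensor_width_mm, focal_length_mm, altitude_m)
        spacing = line_spacing_m(width, sidelap)
        print(f"{focal_length_mm:.0f} mm lens: GSD {gsd * 100:.2f} cm/px, "
              f"footprint {width:.0f} m, line spacing {spacing:.1f} m")
```

With these assumed numbers, the longer lens gives a finer GSD at the same altitude but a narrower footprint, so the flight lines need to sit closer together to keep the same sidelap.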

Lower quality lenses may add distortion: With interchangeable lenses, you need to verify that you are using a high quality lens to get the most out of your camera. You can think of this like wearing glasses: even if you have good vision, the lenses in a pair of glasses can warp what you see or tint its color. A poor lens can reduce clarity or distort the captured images.

Changing lenses requires a new boresight calibration for each lens: If you want to use a different lens with your RGB frame camera, you will need to have a new boresight calibration conducted to get a calibration file for use in data processing. You can then switch freely between the lenses you have calibrations for. You do not need a new calibration every time you take a lens on or off. Once a lens has been calibrated, you just need to make sure that processing uses the calibration file matching the lens used for data collection.
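One simple way to avoid pairing a dataset with the wrong calibration is to keep an explicit lookup from lens to calibration file and fail loudly when a lens has none. The sketch below is only an illustration of that bookkeeping: the lens identifiers, file names, and function name are hypothetical and do not reflect any particular processing software.

```python
from pathlib import Path

# Hypothetical lens identifiers and calibration file names.
CALIBRATIONS = {
    "24mm": Path("calibrations/boresight_24mm.cal"),
    "50mm": Path("calibrations/boresight_50mm.cal"),
}


def calibration_for(lens_id: str) -> Path:
    """Return the calibration file recorded for the lens used in collection."""
    try:
        return CALIBRATIONS[lens_id]
    except KeyError:
        raise LookupError(
            f"No boresight calibration on file for lens {lens_id!r}; "
            "a new calibration is needed before processing."
        ) from None


if __name__ == "__main__":
    print(calibration_for("24mm"))
```

Recording the lens used alongside each flight's data makes this lookup possible at processing time.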

Shutter Type

The two main types of shutter mechanisms used in airborne RGB cameras are rolling and global shutters. With a rolling shutter, pixels are captured across the sensor quickly, one row at a time; a series of small blades deployed just in front of the sensor array blocks further light from reaching the pixels that have already been captured. While these shutters move very quickly, they do not close instantaneously, so rows are captured at slightly different times. For most applications, this will not impact data quality. However, as aircraft speed increases, distortion or blurring can become apparent.
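The effect of aircraft speed can be estimated by converting the distance flown during a capture into pixels. In the rough sketch below, the distance flown while the shutter traverses the sensor approximates rolling shutter distortion, and the distance flown during the exposure approximates motion blur. The shutter traversal time (0.02 s), exposure time (1/1000 s), GSD (2 cm), and the function name are all assumed for illustration and are not measured values for any camera.

```python
def ground_shift_px(ground_speed_m_s: float, duration_s: float, gsd_m: float) -> float:
    """Ground distance travelled during `duration_s`, expressed in pixels."""
    if gsd_m <= 0:
        raise ValueError("GSD must be positive")
    return ground_speed_m_s * duration_s / gsd_m


if __name__ == "__main__":
    # Assumed values for illustration only.
    shutter_traversal_s = 0.02   # time between first and last row being captured
    exposure_s = 1 / 1000        # time each row is exposed to light
    gsd = 0.02                   # metres per pixel
    for speed in (5.0, 10.0, 20.0):
        skew = ground_shift_px(speed, shutter_traversal_s, gsd)
        blur = ground_shift_px(speed, exposure_s, gsd)
        print(f"{speed:>4.0f} m/s: rolling shutter offset ~{skew:.1f} px, "
              f"motion blur ~{blur:.2f} px")
```

Both quantities scale linearly with ground speed, so under these assumptions doubling the speed doubles the offset between the first and last rows of the image.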

A global shutter exposes and reads all pixels of the sensor simultaneously. This reduces the potential for distortion or blurring caused by camera movement, since all pixels are captured at the same location and point in time. The possible drawbacks are that cost is generally higher, as the technology is more expensive, and that frame rates are typically lower than those of rolling shutter cameras, because the sensor needs more time to write the pixels to storage when all the data comes in at once.
